MLRun Package Documentation

The Open-Source MLOps Orchestration Framework

MLRun is an open-source MLOps framework that offers an integrative approach to managing your machine-learning pipelines, from early development through model development to full pipeline deployment in production. MLRun provides a convenient abstraction layer over a wide variety of technology stacks while empowering data engineers and data scientists to define features and models.

The MLRun Architecture

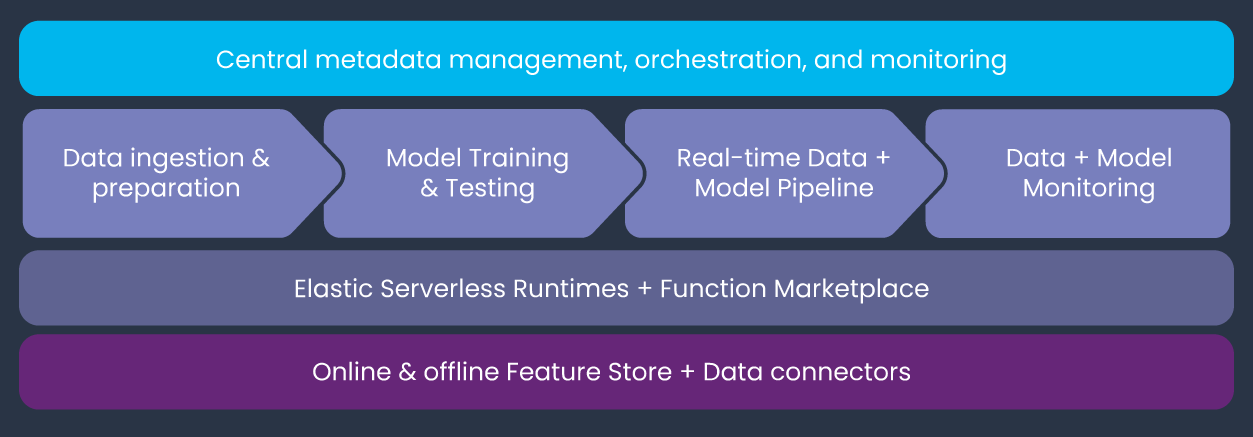

MLRun is composed of the following layers:

- Feature and Artifact Store

Handles the ingestion, processing, metadata, and storage of data and features across multiple repositories and technologies.

- Elastic Serverless Runtimes

Converts simple code to scalable and managed microservices with workload-specific runtime engines (such as Kubernetes jobs, Nuclio, Dask, Spark, and Horovod).

- ML Pipeline Automation

Automates data preparation, model training and testing, deployment of real-time production pipelines, and end-to-end monitoring.

- Central Management

Provides a unified portal for managing the entire MLOps workflow. The portal includes a UI, a CLI, and an SDK, which are accessible from anywhere.
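To illustrate what "simple code" means for the serverless runtimes layer, here is a minimal sketch of a plain Python handler of the kind MLRun can wrap into a managed job. It assumes MLRun's handler convention, where the first argument is a run context that exposes `log_result`. The `LocalContext` class, the `train` handler, its toy scoring formula, and the file name `trainer.py` are all hypothetical; `LocalContext` exists only so the snippet runs without MLRun installed.

```python
# A plain Python handler of the kind MLRun can wrap into a managed runtime.
# Assumption: MLRun passes a run context as the first argument, and
# context.log_result records a scalar result for the run.
def train(context, learning_rate: float = 0.1, epochs: int = 3):
    # Placeholder "training": a toy score, not a real model.
    score = round(1.0 - learning_rate / epochs, 4)
    context.log_result("score", score)
    return score


class LocalContext:
    """Hypothetical stand-in for MLRun's run context so this sketch runs
    without MLRun installed. It only mimics log_result."""

    def __init__(self):
        self.results = {}

    def log_result(self, key, value):
        # Store the logged result in memory instead of an MLRun run record.
        self.results[key] = value


if __name__ == "__main__":
    ctx = LocalContext()
    train(ctx, learning_rate=0.3, epochs=3)
    print(ctx.results)

    # With MLRun installed and this code saved as trainer.py, the same
    # handler could be wrapped and run roughly like this:
    #   import mlrun
    #   fn = mlrun.code_to_function(name="trainer", filename="trainer.py",
    #                               kind="job", image="mlrun/mlrun",
    #                               handler="train")
    #   fn.run(params={"learning_rate": 0.3, "epochs": 3}, local=True)
```

The design point is that the handler itself contains no infrastructure code. Choosing a runtime kind (for example a Kubernetes job or a Nuclio function) is a separate wrapping step, which is what lets the same code move from a local IDE to a scaled deployment.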

Key Benefits

MLRun provides the following key benefits:

- Rapid deployment of code to production pipelines

- Elastic scaling of batch and real-time workloads

- Feature management – ingestion, preparation, and monitoring

- Works anywhere – your local IDE, multi-cloud, or on-prem

Table of Contents

MLRun basics

Concepts

Working with data

Develop functions and models

Deploy ML applications