Projects and automated ML pipeline


This notebook demonstrates how to work with projects and source control (git), and how to automate the ML pipeline.

Before you start, make sure you have gone over the basics in the MLRun quick-start tutorial.

An MLRun project is a container for all your work on a particular activity: all the associated code, functions, jobs, workflows, data, models, and artifacts. Projects can be mapped to git repositories to enable versioning, collaboration, and CI/CD.

You can create project definitions using the SDK or a yaml file and store them in the MLRun DB, a file, or an archive. Once the project is loaded, you can run jobs/workflows that refer to any project element by name, allowing separation between configuration and code. See Create and load projects for details.

Projects contain workflows that execute the registered functions in a sequence/graph (DAG), and which can reference project parameters, secrets and artifacts by name. MLRun currently supports two workflow engines, local (for simple tasks) and Kubeflow Pipelines (for more complex/advanced tasks). MLRun also supports a real-time workflow engine (see Real-time serving pipelines (graphs)).

An ML Engineer can gather the different functions created by the Data Engineer and Data Scientist and create this automated pipeline.

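Tutorial steps:

- MLRun installation and configuration
- Setup the project and functions
- Working with GIT and archives
- Build and run automated ML pipelines and CI/CD
- Test the deployed model endpoint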

MLRun installation and configuration#

Before running this notebook, make sure the mlrun package is installed (pip install mlrun) and that you have configured access to the MLRun service.

# install MLRun if not installed, run this only once (restart the notebook after the install !!!)

# %pip install mlrun
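One common way to point the SDK at an MLRun service is the MLRUN_DBPATH environment variable, set before mlrun is imported. The sketch below only sets that variable. The address is a hypothetical placeholder and the helper name is made up for illustration; use the address of your own MLRun API service.

```python
import os

# Hypothetical address of the MLRun API service; replace with your own.
MLRUN_API_URL = "http://localhost:8080"


def configure_mlrun_env(api_url: str) -> str:
    """Set MLRUN_DBPATH unless the environment already defines it."""
    return os.environ.setdefault("MLRUN_DBPATH", api_url)


# Run this before `import mlrun` so the SDK picks up the address.
active_url = configure_mlrun_env(MLRUN_API_URL)
print(f"MLRun API address: {active_url}")
```

Using setdefault keeps any address that the environment (for example, a managed Jupyter service) has already configured.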

Setup the project and functions#

Get or create a project:

There are three ways to create/load MLRun projects:

- mlrun.projects.new_project(): Create a new MLRun project and optionally load it from a yaml/zip/git template.
- mlrun.projects.load_project(): Load a project from a context directory or remote git/zip/tar archive.
- mlrun.projects.get_or_create_project(): Load a project from the MLRun DB if it exists, or from a specified context/archive.

Projects refer to a context directory that holds all the project code and configuration. The context dir is usually mapped to a git repository and/or to an IDE (PyCharm, VSCode, etc.) project.

import mlrun

project = mlrun.get_or_create_project("tutorial", context="src/", user_project=True)

> 2022-08-24 08:59:21,761 [info] loaded project tutorial from None or context and saved in MLRun DB
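With user_project=True, the current user name is added to the project name, which is why the serving URL shown later in this tutorial contains tutorial-jovyan. The following is a rough illustration of the resulting name. The helper is hypothetical, and the exact normalization that MLRun applies is an assumption.

```python
def user_project_name(name: str, username: str) -> str:
    """Illustrative only: build a per-user project name as name-username."""
    return f"{name}-{username}".lower()


# "jovyan" is the user name that appears in the tutorial's log output.
print(user_project_name("tutorial", "jovyan"))
```

Per-user names let several people run the same tutorial against one MLRun service without overwriting each other's projects.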

Register project functions#

To run workflows, you must save the definitions for the functions in the project so function objects will be initialized automatically when you load a project or when running a project version in automated CI/CD workflows. In addition, you might want to set/register other project attributes such as global parameters, secrets, and data.

Functions are registered using the set_function() command, where you can specify the code, requirements, image, etc. Functions can be created from a single code/notebook file, or they can have access to the entire project context directory: adding the with_repo=True flag guarantees that the project context is cloned into the function runtime environment.

Function registration examples:

# example: register a notebook file as a function

project.set_function('mynb.ipynb', name='test-function', image="mlrun/mlrun", handler="run_test")

# define a job (batch) function which uses code/libs from the project repo

project.set_function(
    name="myjob",
    handler="my_module.job_handler",
    image="mlrun/mlrun",
    kind="job",
    with_repo=True,
)
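The handler string my_module.job_handler points at a function inside the project repo whose code is not shown in this tutorial. As a hypothetical sketch, such a module might look like the following. The handler body, the x parameter, and the FakeContext stand-in are all assumptions made for illustration; only context.log_result mirrors a call on the real MLRun run context.

```python
# my_module.py (hypothetical contents, for illustration only)


def job_handler(context, x: int = 1):
    """Double the input parameter and log it as a run result."""
    y = x * 2
    context.log_result("y", y)
    return y


class FakeContext:
    """Stand-in for the MLRun run context, used only to try the handler locally."""

    def __init__(self):
        self.results = {}

    def log_result(self, key, value):
        self.results[key] = value


ctx = FakeContext()
job_handler(ctx, x=5)
print(ctx.results)
```

Keeping the handler a plain function that takes the context as its first argument makes it easy to exercise without a cluster, as the stand-in shows.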

The code for the data-generation function is in gen_breast_cancer.py.

Register this function in the project:

project.set_function("gen_breast_cancer.py", name="get-data", kind="job", image="mlrun/mlrun", handler="breast_cancer_generator")

<mlrun.runtimes.kubejob.KubejobRuntime at 0x7fc278e12340>

Register additional project objects and metadata:

You can define other objects (workflows, artifacts, secrets) and parameters in the project and use them in your functions, for example:

# register a simple named artifact in the project (to be used in workflows)

data_url = 'https://s3.wasabisys.com/iguazio/data/iris/iris.data.raw.csv'

project.set_artifact('data', target_path=data_url)

# add a multi-stage workflow (./workflow.py) to the project with the name 'main' and save the project

project.set_workflow('main', "./workflow.py")

# read env vars from dict or file and set as project secrets

project.set_secrets({"SECRET1": "value"})

project.set_secrets(file_path="secrets.env")

project.spec.params = {"x": 5}
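The secrets.env file is not shown in this tutorial. Assuming it holds plain KEY=VALUE lines, the sketch below writes a sample file and parses it into the same kind of dict that is passed to set_secrets above. The parser and the API_TOKEN entry are illustrative only; MLRun's own parsing may accept more syntax than this.

```python
import tempfile
from pathlib import Path


def read_env_file(path):
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    secrets = {}
    for line in Path(path).read_text().splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if not sep:
            continue  # ignore lines that have no '='
        secrets[key.strip()] = value.strip()
    return secrets


# Write a sample file to a temporary directory and read it back.
with tempfile.TemporaryDirectory() as tmp:
    env_path = Path(tmp) / "secrets.env"
    env_path.write_text("# example secrets\nSECRET1=value\nAPI_TOKEN=abc123\n")
    parsed = read_env_file(env_path)

print(parsed)
```

Keeping secrets in a separate file that is excluded from version control avoids committing their values along with the project code.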

Save the project:

# save the project in the db (and into the project.yaml file)

project.save()

<mlrun.projects.project.MlrunProject at 0x7fc278e18880>

When you save the project, MLRun stores the project definitions in project.yaml. This allows the project to be reconstructed in a remote cluster or a CI/CD system.

See the generated project file: project.yaml.

Working with GIT and archives#

Push the project code/metadata into an Archive#

Use standard Git commands to push the current project tree into a git archive. Make sure you .save() the project before pushing it.

git remote add origin <server>

git commit -m "Commit message"

git push origin master

Alternatively, you can use MLRun SDK calls:

- project.create_remote(git_uri, branch=branch): register the remote Git path
- project.push(): save the project state and commit/push updates to the remote git repo

You can also save the project content and metadata into a local or remote .zip archive. For example:

project.export("../archive1.zip")

project.export("s3://my-bucket/archive1.zip")

project.export(f"v3io://projects/{project.name}/archive1.zip")

Load a project from local/remote archive#

The project metadata and context (code and configuration) can be loaded and initialized using the load_project() method. When a url (of the git/zip/tar archive) is specified, load_project() clones the remote repo into the local context dir.

# load the project and run the 'main' workflow

project = mlrun.load_project(context="./", name="myproj", url="git://github.com/mlrun/project-archive.git")

project.run("main", arguments={'data': data_url})

Projects can also be loaded and executed using the CLI:

mlrun project -n myproj -u "git://github.com/mlrun/project-archive.git" .

mlrun project -r main -w -a data=<data-url> .

# load the project in the current context dir

project = mlrun.load_project("src/")

Build and run automated ML pipelines and CI/CD#

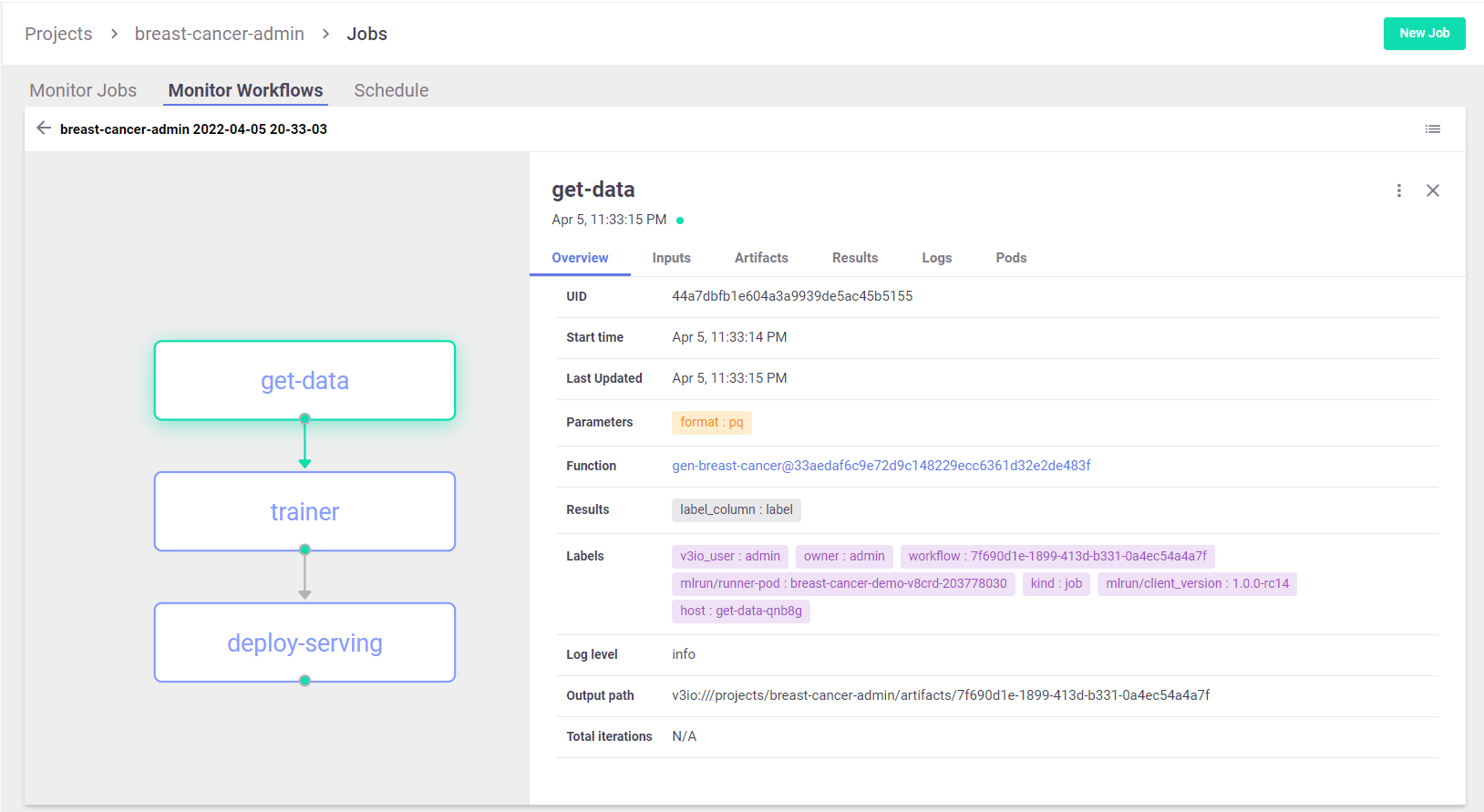

A pipeline is created by running an MLRun “workflow”. The workflow used in this tutorial is defined in workflow.py and describes a directed acyclic graph (DAG), which is executed using the local, remote, or kubeflow engines. See Workflows.

The defined pipeline includes the following steps:

- Generate/prepare the data (ingest).
- Train the model (train).
- Deploy the model as a real-time serverless function (serving).
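To make the DAG idea concrete, the three steps and their dependencies can be modeled with the Python standard library (graphlib, Python 3.9 or later). This is only an illustration of how dependencies determine execution order. It is not how MLRun or Kubeflow Pipelines schedule steps, and the dependency map is an assumption based on the step list above.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps it depends on.
dependencies = {
    "ingest": set(),
    "train": {"ingest"},     # training needs the ingested dataset
    "serving": {"train"},    # serving needs the trained model
}

# static_order() yields every step after all of its dependencies.
order = list(TopologicalSorter(dependencies).static_order())
print(order)
```

In a real workflow the dependencies are usually implied by passing one step's outputs as another step's inputs, rather than being declared in a separate map.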

Note: A pipeline can also include continuous build integration and deployment (CI/CD) steps, such as building container images and deploying models.

Run the workflow:

# run the workflow

run_id = project.run(
    workflow_path="workflow.py",
    arguments={"model_name": "cancer-classifier"},
    watch=True,
)

Run Results

Workflow 892a0851-ac9f-45a6-b405-2b19628b8892 finished, state=Succeeded

| uid | start | state | name | parameters | results |
|---|---|---|---|---|---|
| | Aug 24 09:00:07 | completed | trainer | | accuracy=0.956140350877193 f1_score=0.965034965034965 precision_score=0.9583333333333334 recall_score=0.971830985915493 |
| | Aug 24 08:59:39 | completed | get-data | format=pq model_name=cancer-classifier | label_column=label |

You can also view the pipeline in the MLRun UI.

Run workflows using the CLI:

With MLRun you can use a single command to load the code from local dir or remote archive (Git, zip, …) and execute a pipeline. This can be very useful for integration with CI/CD frameworks and practices. See CI/CD, rolling upgrades, git for more details.

The following command loads the project from the current dir (.) and executes the workflow with an argument, for running locally (without k8s).

mlrun project -r ./workflow.py -w -a model_name=classifier2 .
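In a CI/CD script it can be convenient to assemble this command from variables instead of hard-coding it. The sketch below builds the argument list using only the flags that appear in this tutorial (-r, -w, -a). The helper name and its parameters are hypothetical, and the command is only printed, not executed.

```python
import shlex


def build_project_command(context=".", run=None, arguments=None, watch=False):
    """Assemble an `mlrun project` command line from the flags used above."""
    cmd = ["mlrun", "project"]
    if run:
        cmd += ["-r", run]
    if watch:
        cmd.append("-w")
    for key, value in (arguments or {}).items():
        cmd += ["-a", f"{key}={value}"]
    cmd.append(context)  # the context dir comes last
    return cmd


cmd = build_project_command(
    run="./workflow.py", arguments={"model_name": "classifier2"}, watch=True
)
print(shlex.join(cmd))
```

To actually run the pipeline, the list can be passed to subprocess.run(cmd, check=True); passing a list rather than a single string avoids shell-quoting problems with argument values.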

Test the deployed model endpoint#

Now that your model is deployed using the pipeline, you can invoke it as usual:

serving_fn = project.get_function("serving")

# sample input: a single record with 30 feature values
my_data = {
    "inputs": [
        [
            1.371e+01, 2.083e+01, 9.020e+01, 5.779e+02, 1.189e-01, 1.645e-01,
            9.366e-02, 5.985e-02, 2.196e-01, 7.451e-02, 5.835e-01, 1.377e+00,
            3.856e+00, 5.096e+01, 8.805e-03, 3.029e-02, 2.488e-02, 1.448e-02,
            1.486e-02, 5.412e-03, 1.706e+01, 2.814e+01, 1.106e+02, 8.970e+02,
            1.654e-01, 3.682e-01, 2.678e-01, 1.556e-01, 3.196e-01, 1.151e-01,
        ]
    ]
}

serving_fn.invoke("/v2/models/cancer-classifier/infer", body=my_data)

> 2022-08-24 09:06:42,914 [info] invoking function: {'method': 'POST', 'path': 'http://nuclio-tutorial-jovyan-serving.mlrun.svc.cluster.local:8080/v2/models/cancer-classifier/infer'}

{'id': '9558b3a9-30c0-49e5-a55a-d5defd8d38e8',

'model_name': 'cancer-classifier',

'outputs': [0]}
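The request and response above follow a simple shape: a body with an "inputs" list of feature rows, and a response with an "outputs" list of predictions. The helpers below are a minimal sketch for building and checking such payloads before calling invoke(). Their names and the row-length check are assumptions for illustration and are not part of the MLRun API.

```python
import json


def infer_path(model_name: str) -> str:
    """Path of the inference endpoint for a model, in the form used above."""
    return f"/v2/models/{model_name}/infer"


def build_infer_body(rows, n_features):
    """Wrap feature rows in an {"inputs": [...]} body, checking each row's length."""
    for i, row in enumerate(rows):
        if len(row) != n_features:
            raise ValueError(f"row {i} has {len(row)} values, expected {n_features}")
    return {"inputs": [[float(v) for v in row] for row in rows]}


def extract_outputs(response):
    """Return the predictions from a response dict or JSON string."""
    if isinstance(response, (str, bytes)):
        response = json.loads(response)
    return response["outputs"]


# Placeholder values: one row of 30 zeros, and a response shaped like the one above.
body = build_infer_body([[0.0] * 30], n_features=30)
sample_response = '{"id": "demo", "model_name": "cancer-classifier", "outputs": [0]}'
print(infer_path("cancer-classifier"), extract_outputs(sample_response))
```

Validating the row length on the client side gives a clearer error than a failed prediction when a feature is accidentally dropped.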

Done!#

Congratulations! You’ve completed the getting started tutorial.

You might also want to explore the following demos:

For an example of a distributed training pipeline using TensorFlow, Keras, and PyTorch, see the mask detection demo.

To learn more about deploying live endpoints and concept drift, see the network-operations (NetOps) demo.

To learn about using the feature store to process raw transactions and events in real-time and respond and block transactions before they occur, see the Fraud prevention demo.

For an example of a pipeline that summarizes and extracts keywords from a news article URL, see the News article summarization and keyword extraction via NLP demo.