Train, compare, and register models

This notebook provides a quick overview of training ML models using the MLRun MLOps orchestration framework.

Make sure you have reviewed the basics in the MLRun Quick Start Tutorial.

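Tutorial steps:

- MLRun installation and configuration
- Define an MLRun project and a training function
- Run the training function and log the artifacts and model
- Hyper-parameter tuning and model/experiment comparison
- Build and test the model serving functions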

MLRun installation and configuration

Before running this notebook, make sure the mlrun and scikit-learn packages are installed (pip install mlrun scikit-learn~=1.3) and that you have configured access to the MLRun service.

# Install MLRun and scikit-learn if they are not installed. Run this only once, then restart the notebook kernel.
%pip install mlrun scikit-learn~=1.3

Define an MLRun project and a training function

You should create, load, or use (get) an MLRun project that holds all your functions and assets.

Get or create a new project

The get_or_create_project() method tries to load the project from the MLRun DB. If the project does not exist, it creates a new one.
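The get-or-create behavior can be pictured with a small in-memory sketch. The `Project` class, the `get_or_create` helper, and the dict standing in for the MLRun DB are all hypothetical. The only detail taken from this notebook is that `user_project=True` adds the user name to the project name, which is why the later outputs show `tutorial-iguazio`.

```python
from dataclasses import dataclass, field


@dataclass
class Project:
    """Hypothetical stand-in for a project record."""
    name: str
    functions: dict = field(default_factory=dict)


def get_or_create(db: dict, name: str, user: str = "", user_project: bool = False) -> Project:
    """Return the stored project, creating and storing it only if it is missing."""
    # Assumption: user_project=True appends "-<user>" to the name,
    # matching the "tutorial-iguazio" project name in this notebook's outputs.
    if user_project and user:
        name = f"{name}-{user}"
    if name not in db:  # not found: create a new project and persist it
        db[name] = Project(name)
    return db[name]  # found (or just created): load it


db = {}  # hypothetical in-memory replacement for the MLRun DB
first = get_or_create(db, "tutorial", user="iguazio", user_project=True)
second = get_or_create(db, "tutorial", user="iguazio", user_project=True)
print(first.name, first is second)  # tutorial-iguazio True
```

Calling the helper twice returns the same object, which is the property that makes the call safe to rerun at the top of a notebook.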

import mlrun

project = mlrun.get_or_create_project("tutorial", context="./", user_project=True)

> 2022-09-20 13:55:10,543 [info] loaded project tutorial from None or context and saved in MLRun DB

Add (auto) MLOps to your training function

Training functions generate models and various model statistics. You'll want to store the models along with all the relevant data, metadata, and measurements. MLRun can apply all the MLOps functionality automatically ("Auto-MLOps") by simply using the framework-specific apply_mlrun() method.

This is the line to add to your code, as shown in the training function below.

apply_mlrun(model=model, model_name="my_model", x_test=x_test, y_test=y_test)

apply_mlrun() manages the training process and automatically logs the framework-specific model object, along with its details, data, metadata, and metrics.

It accepts the model object and various optional parameters. When you specify x_test and y_test, it generates various plots and calculations to evaluate the model.

Metadata and parameters are recorded automatically (from the MLRun context object), so you don't need to specify them.
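How can a single call placed before `model.fit()` capture metrics after training? One common approach is to wrap the model's `fit` method so that evaluation and logging run as soon as training finishes. The sketch below illustrates that idea with a toy model and a hypothetical `apply_auto_logging` helper that records only accuracy. It is an assumption about the general pattern, not MLRun's implementation of `apply_mlrun()`.

```python
class MajorityClassifier:
    """Toy model with an sklearn-like fit/predict interface (illustration only)."""

    def fit(self, x, y):
        labels = list(y)
        self.label_ = max(set(labels), key=labels.count)  # most frequent label
        return self

    def predict(self, x):
        return [self.label_ for _ in x]


def apply_auto_logging(model, x_test, y_test, log):
    """Hypothetical helper: wrap model.fit so evaluation runs right after training."""
    original_fit = model.fit  # bound method of the unwrapped model

    def fit_and_log(x, y):
        result = original_fit(x, y)  # train as usual
        predictions = model.predict(x_test)
        correct = sum(p == t for p, t in zip(predictions, y_test))
        log["accuracy"] = correct / len(y_test)  # metric computed on the test set
        log["model"] = model  # keep a reference to the trained model object
        return result

    model.fit = fit_and_log  # the instance attribute shadows the class method
    return model


log = {}
model = apply_auto_logging(
    MajorityClassifier(), x_test=[[0], [1], [2], [3]], y_test=[1, 1, 0, 1], log=log
)
model.fit([[5], [6], [7]], [1, 1, 0])  # training now also fills `log`
print(log["accuracy"])  # 0.75
```

Because the wrapping happens on the model object, the wrapper has to be installed before `fit()` is called, which matches the order used in the training function.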

Function code

Run the following cell to generate the trainer.py file (or copy it manually):

%%writefile trainer.py
import pandas as pd
from sklearn import ensemble
from sklearn.model_selection import train_test_split

import mlrun
from mlrun.frameworks.sklearn import apply_mlrun


@mlrun.handler()
def train(
    dataset: pd.DataFrame,
    label_column: str = "label",
    n_estimators: int = 100,
    learning_rate: float = 0.1,
    max_depth: int = 3,
    model_name: str = "cancer_classifier",
):
    # Initialize the x & y data
    x = dataset.drop(label_column, axis=1)
    y = dataset[label_column]

    # Train/Test split the dataset
    x_train, x_test, y_train, y_test = train_test_split(
        x, y, test_size=0.2, random_state=42
    )

    # Pick an ideal ML model
    model = ensemble.GradientBoostingClassifier(
        n_estimators=n_estimators, learning_rate=learning_rate, max_depth=max_depth
    )

    # -------------------- The only line you need to add for MLOps -------------------------
    # Wraps the model with MLOps (test set is provided for analysis & accuracy measurements)
    apply_mlrun(model=model, model_name=model_name, x_test=x_test, y_test=y_test)
    # --------------------------------------------------------------------------------------

    # Train the model
    model.fit(x_train, y_train)

Overwriting trainer.py

Create a serverless function object from the code above, and register it in the project:

trainer = project.set_function(
    "trainer.py", name="trainer", kind="job", image="mlrun/mlrun", handler="train"
)

Run the training function and log the artifacts and model#

Create a dataset for training

import pandas as pd
from sklearn.datasets import load_breast_cancer

breast_cancer = load_breast_cancer()
breast_cancer_dataset = pd.DataFrame(
    data=breast_cancer.data, columns=breast_cancer.feature_names
)
breast_cancer_labels = pd.DataFrame(data=breast_cancer.target, columns=["label"])
breast_cancer_dataset = pd.concat([breast_cancer_dataset, breast_cancer_labels], axis=1)
breast_cancer_dataset.to_csv("cancer-dataset.csv", index=False)

Run the function (locally) using the generated dataset

trainer_run = project.run_function(
    "trainer",
    inputs={"dataset": "cancer-dataset.csv"},
    params={"n_estimators": 100, "learning_rate": 1e-1, "max_depth": 3},
    local=True,
)

> 2022-09-20 13:56:57,630 [info] starting run trainer-train uid=b3f1bc3379324767bee22f44942b96e4 DB=http://mlrun-api:8080

| project | uid | iter | start | state | name | labels | inputs | parameters | results | artifacts |
|---|---|---|---|---|---|---|---|---|---|---|
| tutorial-iguazio | b3f1bc3379324767bee22f44942b96e4 | 0 | Sep 20 13:56:57 | completed | trainer-train | v3io_user=iguazio kind= owner=iguazio host=jupyter-5654cb444f-c9wk2 | dataset | n_estimators=100 learning_rate=0.1 max_depth=3 | accuracy=0.956140350877193 f1_score=0.965034965034965 precision_score=0.9583333333333334 recall_score=0.971830985915493 | feature-importance test_set confusion-matrix roc-curves calibration-curve model |

> 2022-09-20 13:56:59,356 [info] run executed, status=completed

View the auto-generated results and artifacts

trainer_run.outputs

{'accuracy': 0.956140350877193,

'f1_score': 0.965034965034965,

'precision_score': 0.9583333333333334,

'recall_score': 0.971830985915493,

'feature-importance': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/0/feature-importance.html',

'test_set': 'store://artifacts/tutorial-iguazio/trainer-train_test_set:b3f1bc3379324767bee22f44942b96e4',

'confusion-matrix': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/0/confusion-matrix.html',

'roc-curves': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/0/roc-curves.html',

'calibration-curve': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/0/calibration-curve.html',

'model': 'store://artifacts/tutorial-iguazio/cancer_classifier:b3f1bc3379324767bee22f44942b96e4'}

trainer_run.artifact("feature-importance").show()

Export model files + metadata into a zip (requires MLRun 1.1.0 and later)

You can export() the model package (files + metadata) into a zip file, and load it on a remote system/cluster by running model = project.import_artifact(key, path).
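Conceptually, the exported package is an archive that holds the model file next to its metadata. This minimal sketch shows that round trip with Python's standard zipfile and json modules. The helper names and the file names inside the archive (model.pkl, metadata.json) are hypothetical and do not describe MLRun's actual package layout.

```python
import io
import json
import zipfile


def export_package(model_bytes: bytes, metadata: dict) -> bytes:
    """Write a model file and its metadata into an in-memory zip archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr("model.pkl", model_bytes)  # hypothetical model file name
        archive.writestr("metadata.json", json.dumps(metadata))  # hypothetical metadata file
    return buffer.getvalue()


def import_package(data: bytes) -> tuple:
    """Read the model file and its metadata back from the archive."""
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        model_bytes = archive.read("model.pkl")
        metadata = json.loads(archive.read("metadata.json"))
    return model_bytes, metadata


package = export_package(
    b"serialized-model", {"key": "cancer_classifier", "metrics": {"accuracy": 0.956140350877193}}
)
model_bytes, metadata = import_package(package)
print(metadata["key"])  # cancer_classifier
```

Keeping the metadata inside the same archive is what lets the receiving system restore the model together with its metrics and other details in one step.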

trainer_run.artifact("model").meta.export("model.zip")

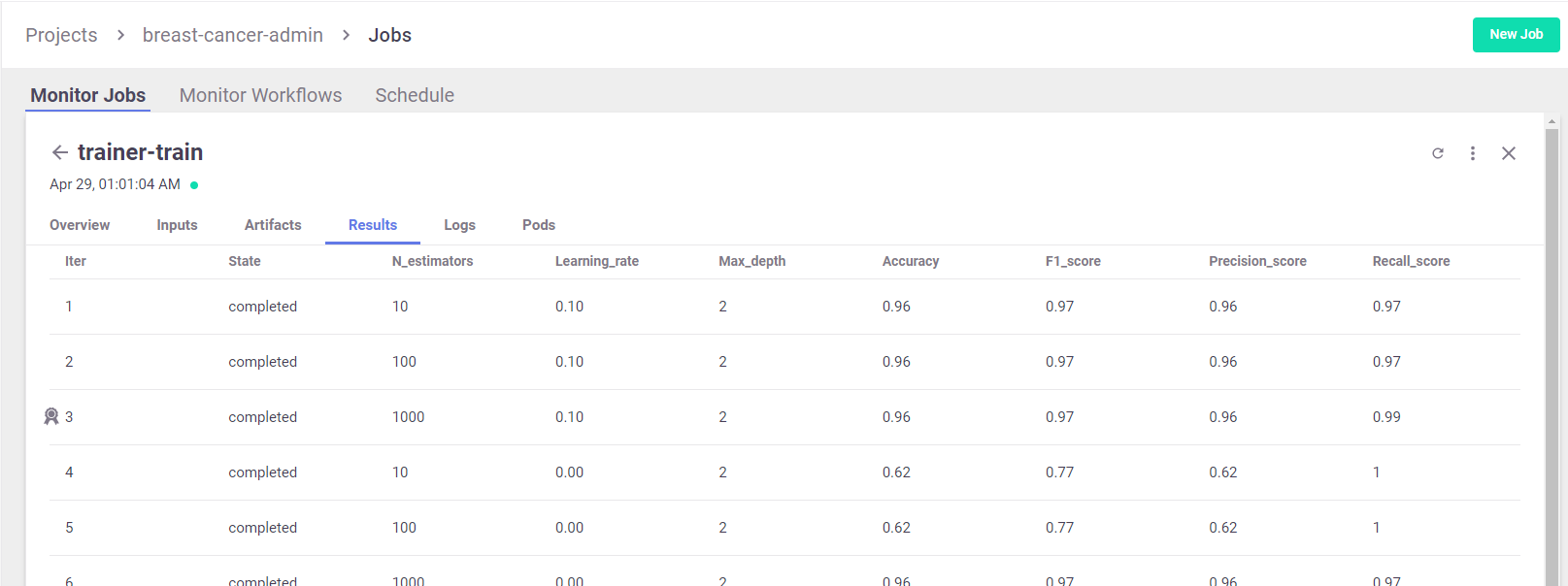

Hyper-parameter tuning and model/experiment comparison

Run a grid search over a few parameters, and select the best run according to the maximum accuracy.

(For more details, see MLRun Hyper-Param and Iterative jobs.)

For basic usage, you can run the hyperparameter tuning job with these arguments:

- hyperparams: the hyperparameter options and the values to try.
- selector: how to select the best model.
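To see what these two arguments amount to, the sketch below expands the hyperparams dict used later in this section into a full grid (3 × 2 × 2 = 12 combinations, matching the 12 iterations in the run comparison output). It then applies a "max.accuracy" style selector. The `grid` and `select_best` helpers are hypothetical, and MLRun may number the iterations in a different order. The accuracy values are copied from the run comparison in this notebook.

```python
import itertools

# Same search space as the hp_tuning_run call in this section
hyperparams = {
    "n_estimators": [10, 100, 1000],
    "learning_rate": [1e-1, 1e-3],
    "max_depth": [2, 8],
}


def grid(params: dict) -> list:
    """Expand lists of candidate values into every parameter combination."""
    keys = list(params)
    return [dict(zip(keys, values)) for values in itertools.product(*params.values())]


def select_best(results: list, selector: str) -> dict:
    """Pick one result using a '<max|min>.<metric>' selector string."""
    direction, metric = selector.split(".", 1)
    if direction not in ("max", "min"):
        raise ValueError(f"unsupported selector direction: {direction}")
    choose = max if direction == "max" else min
    return choose(results, key=lambda result: result[metric])


combos = grid(hyperparams)
print(len(combos))  # 12

# Accuracy per iteration, copied from the run comparison output in this notebook
accuracy_by_iter = {
    1: 0.956140350877193, 2: 0.956140350877193, 3: 0.9649122807017544,
    4: 0.6228070175438597, 5: 0.6228070175438597, 6: 0.956140350877193,
    7: 0.9385964912280702, 8: 0.9473684210526315, 9: 0.9473684210526315,
    10: 0.6228070175438597, 11: 0.6228070175438597, 12: 0.9385964912280702,
}
results = [{"iter": i, "accuracy": acc} for i, acc in accuracy_by_iter.items()]
best = select_best(results, "max.accuracy")
print(best["iter"])  # 3
```

The selected iteration agrees with the "best iteration=3, used criteria max.accuracy" log line of the tuning run.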

Running a remote function

To run the hyperparameter tuning task on the cluster, the input data must be available to the job, either in object storage or in the MLRun versioned artifact store.

The following line logs (and uploads) the dataframe as a project artifact:

dataset_artifact = project.log_dataset(
    "cancer-dataset", df=breast_cancer_dataset, index=False
)

Run the function on the remote Kubernetes cluster (local is not set):

hp_tuning_run = project.run_function(
    "trainer",
    inputs={"dataset": dataset_artifact.uri},
    hyperparams={
        "n_estimators": [10, 100, 1000],
        "learning_rate": [1e-1, 1e-3],
        "max_depth": [2, 8],
    },
    selector="max.accuracy",
)

> 2022-09-20 13:57:28,217 [info] starting run trainer-train uid=b7696b221a174f66979be01138797f19 DB=http://mlrun-api:8080

> 2022-09-20 13:57:28,365 [info] Job is running in the background, pod: trainer-train-xfzfp

Matplotlib created a temporary config/cache directory at /tmp/matplotlib-zuih5pkq because the default path (/.config/matplotlib) is not a writable directory; it is highly recommended to set the MPLCONFIGDIR environment variable to a writable directory, in particular to speed up the import of Matplotlib and to better support multiprocessing.

> 2022-09-20 13:58:07,356 [info] best iteration=3, used criteria max.accuracy

> 2022-09-20 13:58:07,750 [info] run executed, status=completed

final state: completed

| project | uid | iter | start | state | name | labels | inputs | parameters | results | artifacts |
|---|---|---|---|---|---|---|---|---|---|---|
| tutorial-iguazio | b7696b221a174f66979be01138797f19 | 0 | Sep 20 13:57:33 | completed | trainer-train | v3io_user=iguazio kind=job owner=iguazio mlrun/client_version=1.1.0 | dataset | | best_iteration=3 accuracy=0.9649122807017544 f1_score=0.9722222222222222 precision_score=0.958904109589041 recall_score=0.9859154929577465 | feature-importance test_set confusion-matrix roc-curves calibration-curve model iteration_results parallel_coordinates |

> 2022-09-20 13:58:13,925 [info] run executed, status=completed

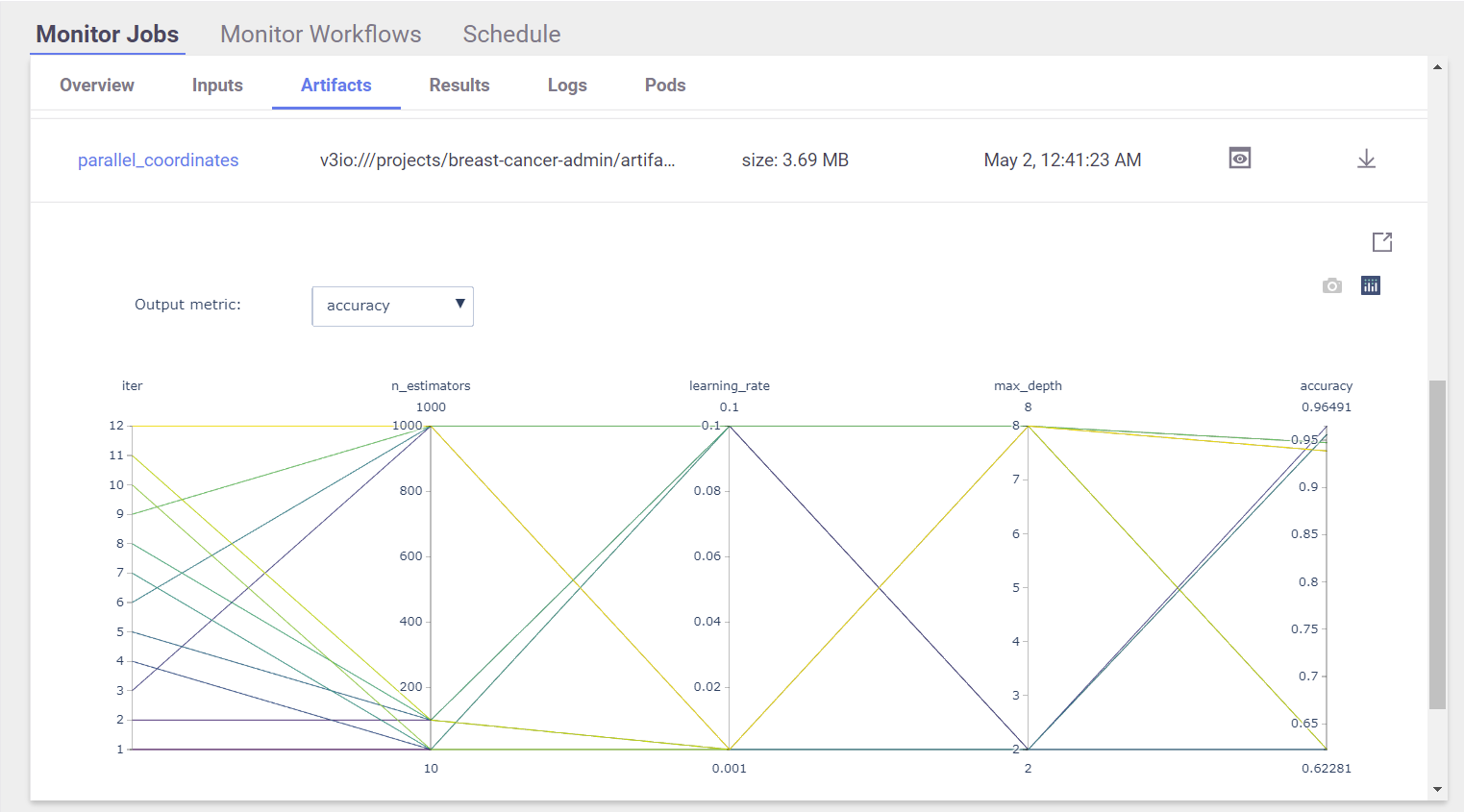

View Hyper-param results and the selected run in the MLRun UI

Interactive Parallel Coordinates Plot

List the generated models and compare the different runs

hp_tuning_run.outputs

{'best_iteration': 3,

'accuracy': 0.9649122807017544,

'f1_score': 0.9722222222222222,

'precision_score': 0.958904109589041,

'recall_score': 0.9859154929577465,

'feature-importance': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/3/feature-importance.html',

'test_set': 'store://artifacts/tutorial-iguazio/trainer-train_test_set:b7696b221a174f66979be01138797f19',

'confusion-matrix': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/3/confusion-matrix.html',

'roc-curves': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/3/roc-curves.html',

'calibration-curve': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/3/calibration-curve.html',

'model': 'store://artifacts/tutorial-iguazio/cancer_classifier:b7696b221a174f66979be01138797f19',

'iteration_results': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/0/iteration_results.csv',

'parallel_coordinates': 'v3io:///projects/tutorial-iguazio/artifacts/trainer-train/0/parallel_coordinates.html'}

# List the models in the project (can apply filters)
models = project.list_models()
for model in models:
    print(f"uri: {model.uri}, metrics: {model.metrics}")


uri: store://models/tutorial-iguazio/cancer_classifier#0:b3f1bc3379324767bee22f44942b96e4, metrics: {'accuracy': 0.956140350877193, 'f1_score': 0.965034965034965, 'precision_score': 0.9583333333333334, 'recall_score': 0.971830985915493}

uri: store://models/tutorial-iguazio/cancer_classifier#1:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.956140350877193, 'f1_score': 0.965034965034965, 'precision_score': 0.9583333333333334, 'recall_score': 0.971830985915493}

uri: store://models/tutorial-iguazio/cancer_classifier#2:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.956140350877193, 'f1_score': 0.965034965034965, 'precision_score': 0.9583333333333334, 'recall_score': 0.971830985915493}

uri: store://models/tutorial-iguazio/cancer_classifier#3:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.9649122807017544, 'f1_score': 0.9722222222222222, 'precision_score': 0.958904109589041, 'recall_score': 0.9859154929577465}

uri: store://models/tutorial-iguazio/cancer_classifier#4:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.6228070175438597, 'f1_score': 0.7675675675675676, 'precision_score': 0.6228070175438597, 'recall_score': 1.0}

uri: store://models/tutorial-iguazio/cancer_classifier#5:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.6228070175438597, 'f1_score': 0.7675675675675676, 'precision_score': 0.6228070175438597, 'recall_score': 1.0}

uri: store://models/tutorial-iguazio/cancer_classifier#6:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.956140350877193, 'f1_score': 0.965034965034965, 'precision_score': 0.9583333333333334, 'recall_score': 0.971830985915493}

uri: store://models/tutorial-iguazio/cancer_classifier#7:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.9385964912280702, 'f1_score': 0.951048951048951, 'precision_score': 0.9444444444444444, 'recall_score': 0.9577464788732394}

uri: store://models/tutorial-iguazio/cancer_classifier#8:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.9473684210526315, 'f1_score': 0.9577464788732394, 'precision_score': 0.9577464788732394, 'recall_score': 0.9577464788732394}

uri: store://models/tutorial-iguazio/cancer_classifier#9:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.9473684210526315, 'f1_score': 0.9577464788732394, 'precision_score': 0.9577464788732394, 'recall_score': 0.9577464788732394}

uri: store://models/tutorial-iguazio/cancer_classifier#10:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.6228070175438597, 'f1_score': 0.7675675675675676, 'precision_score': 0.6228070175438597, 'recall_score': 1.0}

uri: store://models/tutorial-iguazio/cancer_classifier#11:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.6228070175438597, 'f1_score': 0.7675675675675676, 'precision_score': 0.6228070175438597, 'recall_score': 1.0}

uri: store://models/tutorial-iguazio/cancer_classifier#12:b7696b221a174f66979be01138797f19, metrics: {'accuracy': 0.9385964912280702, 'f1_score': 0.951048951048951, 'precision_score': 0.9444444444444444, 'recall_score': 0.9577464788732394}
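Each model URI above encodes the project, the model key, the iteration, and the run uid. A small regex-based sketch for pulling those fields apart follows. The pattern and the `parse_model_uri` helper are hypothetical and inferred only from the URIs printed above, so other MLRun versions, or URIs that carry tags, may not match it.

```python
import re

# Shape inferred from the URIs printed above: store://models/<project>/<key>#<iter>:<uid>
MODEL_URI = re.compile(
    r"^store://models/(?P<project>[^/]+)/(?P<key>[^#:]+)#(?P<iter>\d+):(?P<uid>\w+)$"
)


def parse_model_uri(uri: str) -> dict:
    """Split a model store URI into its fields (hypothetical helper)."""
    match = MODEL_URI.match(uri)
    if match is None:
        raise ValueError(f"unexpected model URI: {uri}")
    fields = match.groupdict()
    fields["iter"] = int(fields["iter"])
    return fields


fields = parse_model_uri(
    "store://models/tutorial-iguazio/cancer_classifier#3:b7696b221a174f66979be01138797f19"
)
print(fields["key"], fields["iter"])  # cancer_classifier 3
```

Grouping the parsed URIs by uid separates the single local run (iteration 0) from the 12 iterations of the tuning run.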

# To view the full model object use:

# print(models[0].to_yaml())

# Compare the runs (generate interactive parallel coordinates plot and a table)

project.list_runs(name="trainer-train", iter=True).compare()


| iter | start | state | name | parameters | results |
|---|---|---|---|---|---|
| 12 | Sep 20 13:57:59 | completed | trainer-train | n_estimators=1000 learning_rate=0.001 max_depth=8 | accuracy=0.9385964912280702 f1_score=0.951048951048951 precision_score=0.9444444444444444 recall_score=0.9577464788732394 |
| 11 | Sep 20 13:57:57 | completed | trainer-train | n_estimators=100 learning_rate=0.001 max_depth=8 | accuracy=0.6228070175438597 f1_score=0.7675675675675676 precision_score=0.6228070175438597 recall_score=1.0 |
| 10 | Sep 20 13:57:55 | completed | trainer-train | n_estimators=10 learning_rate=0.001 max_depth=8 | accuracy=0.6228070175438597 f1_score=0.7675675675675676 precision_score=0.6228070175438597 recall_score=1.0 |
| 9 | Sep 20 13:57:53 | completed | trainer-train | n_estimators=1000 learning_rate=0.1 max_depth=8 | accuracy=0.9473684210526315 f1_score=0.9577464788732394 precision_score=0.9577464788732394 recall_score=0.9577464788732394 |
| 8 | Sep 20 13:57:51 | completed | trainer-train | n_estimators=100 learning_rate=0.1 max_depth=8 | accuracy=0.9473684210526315 f1_score=0.9577464788732394 precision_score=0.9577464788732394 recall_score=0.9577464788732394 |
| 7 | Sep 20 13:57:50 | completed | trainer-train | n_estimators=10 learning_rate=0.1 max_depth=8 | accuracy=0.9385964912280702 f1_score=0.951048951048951 precision_score=0.9444444444444444 recall_score=0.9577464788732394 |
| 6 | Sep 20 13:57:45 | completed | trainer-train | n_estimators=1000 learning_rate=0.001 max_depth=2 | accuracy=0.956140350877193 f1_score=0.965034965034965 precision_score=0.9583333333333334 recall_score=0.971830985915493 |
| 5 | Sep 20 13:57:43 | completed | trainer-train | n_estimators=100 learning_rate=0.001 max_depth=2 | accuracy=0.6228070175438597 f1_score=0.7675675675675676 precision_score=0.6228070175438597 recall_score=1.0 |
| 4 | Sep 20 13:57:42 | completed | trainer-train | n_estimators=10 learning_rate=0.001 max_depth=2 | accuracy=0.6228070175438597 f1_score=0.7675675675675676 precision_score=0.6228070175438597 recall_score=1.0 |
| 3 | Sep 20 13:57:38 | completed | trainer-train | n_estimators=1000 learning_rate=0.1 max_depth=2 | accuracy=0.9649122807017544 f1_score=0.9722222222222222 precision_score=0.958904109589041 recall_score=0.9859154929577465 |
| 2 | Sep 20 13:57:36 | completed | trainer-train | n_estimators=100 learning_rate=0.1 max_depth=2 | accuracy=0.956140350877193 f1_score=0.965034965034965 precision_score=0.9583333333333334 recall_score=0.971830985915493 |
| 1 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=10 learning_rate=0.1 max_depth=2 | accuracy=0.956140350877193 f1_score=0.965034965034965 precision_score=0.9583333333333334 recall_score=0.971830985915493 |
| 1 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=10 learning_rate=0.1 max_depth=2 | accuracy=0.956140350877193 f1_score=0.965034965034965 precision_score=0.9583333333333334 recall_score=0.971830985915493 |
| 2 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=100 learning_rate=0.1 max_depth=2 | accuracy=0.956140350877193 f1_score=0.965034965034965 precision_score=0.9583333333333334 recall_score=0.971830985915493 |
| 3 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=1000 learning_rate=0.1 max_depth=2 | accuracy=0.9649122807017544 f1_score=0.9722222222222222 precision_score=0.958904109589041 recall_score=0.9859154929577465 |
| 4 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=10 learning_rate=0.001 max_depth=2 | accuracy=0.6228070175438597 f1_score=0.7675675675675676 precision_score=0.6228070175438597 recall_score=1.0 |
| 5 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=100 learning_rate=0.001 max_depth=2 | accuracy=0.6228070175438597 f1_score=0.7675675675675676 precision_score=0.6228070175438597 recall_score=1.0 |
| 6 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=1000 learning_rate=0.001 max_depth=2 | accuracy=0.956140350877193 f1_score=0.965034965034965 precision_score=0.9583333333333334 recall_score=0.971830985915493 |
| 7 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=10 learning_rate=0.1 max_depth=8 | accuracy=0.9385964912280702 f1_score=0.951048951048951 precision_score=0.9444444444444444 recall_score=0.9577464788732394 |
| 8 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=100 learning_rate=0.1 max_depth=8 | accuracy=0.9473684210526315 f1_score=0.9577464788732394 precision_score=0.9577464788732394 recall_score=0.9577464788732394 |
| 9 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=1000 learning_rate=0.1 max_depth=8 | accuracy=0.9473684210526315 f1_score=0.9577464788732394 precision_score=0.9577464788732394 recall_score=0.9577464788732394 |
| 10 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=10 learning_rate=0.001 max_depth=8 | accuracy=0.6228070175438597 f1_score=0.7675675675675676 precision_score=0.6228070175438597 recall_score=1.0 |
| 11 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=100 learning_rate=0.001 max_depth=8 | accuracy=0.6228070175438597 f1_score=0.7675675675675676 precision_score=0.6228070175438597 recall_score=1.0 |
| 12 | Sep 20 13:57:33 | completed | trainer-train | n_estimators=1000 learning_rate=0.001 max_depth=8 | accuracy=0.9385964912280702 f1_score=0.951048951048951 precision_score=0.9444444444444444 recall_score=0.9577464788732394 |
| 0 | Sep 20 13:56:57 | completed | trainer-train | n_estimators=100 learning_rate=0.1 max_depth=3 | accuracy=0.956140350877193 f1_score=0.965034965034965 precision_score=0.9583333333333334 recall_score=0.971830985915493 |
| 0 | Sep 20 13:56:16 | error | trainer-train | n_estimators=100 learning_rate=0.1 max_depth=3 | |
| 0 | Sep 20 13:56:03 | error | trainer-train | n_estimators=100 learning_rate=0.1 max_depth=3 | |
| 0 | Sep 20 13:55:25 | running | trainer-train | n_estimators=100 learning_rate=0.1 max_depth=3 | |
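You can check the metric values in this comparison by hand. Assuming the 569-row scikit-learn breast cancer dataset and test_size=0.2, train_test_split rounds the test size up to 114 rows, so every reported accuracy is a multiple of 1/114. The confusion-matrix counts below are back-solved from the reported precision and recall, not read from MLRun. They suggest that the rows with recall_score=1.0 and accuracy equal to precision come from a model that predicts label 1 for every test row.

```python
import math

N_SAMPLES = 569  # rows in scikit-learn's breast cancer dataset
n_test = math.ceil(0.2 * N_SAMPLES)  # a fractional test_size is rounded up: 114 rows


def metrics(tp: int, fp: int, fn: int, tn: int) -> dict:
    """Binary classification metrics from confusion-matrix counts (label 1 is positive)."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "f1_score": 2 * precision * recall / (precision + recall),
        "precision_score": precision,
        "recall_score": recall,
    }


# Counts back-solved from the reported metrics; each set sums to n_test (114)
baseline = metrics(tp=69, fp=3, fn=2, tn=40)  # e.g. the local run (iter 0)
best = metrics(tp=70, fp=3, fn=1, tn=40)  # iteration 3, the selected run
all_positive = metrics(tp=71, fp=43, fn=0, tn=0)  # e.g. iterations 4, 5, 10, 11

print(n_test, round(best["accuracy"], 4), round(all_positive["f1_score"], 4))  # 114 0.9649 0.7676
```

The all-positive rows appear only with learning_rate=0.001 and 10 or 100 estimators, which is consistent with those models being too undertrained to move away from the majority class.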

Build and test the model serving functions

MLRun serving can produce managed, real-time, serverless pipelines composed of various data processing and ML tasks. The pipelines use the Nuclio real-time serverless engine, which can be deployed anywhere. For more details and examples, see the MLRun Serving Graphs.
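A serving pipeline chains processing steps, with each step receiving the previous step's output. The toy sketch below models a linear flow as plain function composition. The `validate`, `predict`, and `postprocess` steps are hypothetical stand-ins: the predict step is a fixed threshold, not a trained model. The sketch does not use the MLRun serving API.

```python
from typing import Callable, Dict

Event = Dict[str, list]
Step = Callable[[Event], Event]


def build_flow(*steps: Step) -> Step:
    """Chain steps so each one receives the previous step's output (a linear graph)."""
    def run(event: Event) -> Event:
        for step in steps:
            event = step(event)
        return event
    return run


def validate(event: Event) -> Event:  # hypothetical pre-processing step
    if not event.get("inputs"):
        raise ValueError("request has no inputs")
    return event


def predict(event: Event) -> Event:  # hypothetical stand-in model: threshold on the first feature
    event["outputs"] = [int(row[0] > 15.0) for row in event["inputs"]]
    return event


def postprocess(event: Event) -> Event:  # hypothetical step that keeps only the outputs
    return {"outputs": event["outputs"]}


flow = build_flow(validate, predict, postprocess)
print(flow({"inputs": [[13.71, 20.83], [17.06, 28.14]]}))  # {'outputs': [0, 1]}
```

Because each step shares the same event-in, event-out signature, steps can be added, removed, or reordered without changing the others.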

Create a model serving function from your code, and add the model produced by the hyperparameter tuning run:

serving_fn = mlrun.new_function("serving", image="mlrun/mlrun", kind="serving")
serving_fn.add_model(
    "cancer-classifier",
    model_path=hp_tuning_run.outputs["model"],
    class_name="mlrun.frameworks.sklearn.SklearnModelServer",
)

<mlrun.serving.states.TaskStep at 0x7feb1f55faf0>

# Create a mock (simulator of the real-time function)
server = serving_fn.to_mock_server()

my_data = {
    "inputs": [
        [
            1.371e01, 2.083e01, 9.020e01, 5.779e02, 1.189e-01,
            1.645e-01, 9.366e-02, 5.985e-02, 2.196e-01, 7.451e-02,
            5.835e-01, 1.377e00, 3.856e00, 5.096e01, 8.805e-03,
            3.029e-02, 2.488e-02, 1.448e-02, 1.486e-02, 5.412e-03,
            1.706e01, 2.814e01, 1.106e02, 8.970e02, 1.654e-01,
            3.682e-01, 2.678e-01, 1.556e-01, 3.196e-01, 1.151e-01,
        ]
    ]
}

server.test("/v2/models/cancer-classifier/infer", body=my_data)

> 2022-09-20 14:12:35,714 [warning] run command, file or code were not specified

> 2022-09-20 14:12:35,859 [info] model cancer-classifier was loaded

> 2022-09-20 14:12:35,860 [info] Loaded ['cancer-classifier']

/conda/envs/mlrun-extended/lib/python3.8/site-packages/sklearn/base.py:450: UserWarning:

X does not have valid feature names, but GradientBoostingClassifier was fitted with feature names

{'id': 'd9aee47cadc042ebbd9474ec0179a446',

'model_name': 'cancer-classifier',

'outputs': [0]}
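The mock server call sends the JSON body to the path /v2/models/<model-name>/infer, and the response contains id, model_name, and outputs. This stdlib sketch imitates that request/response shape with a hypothetical `handle_request` router and a stand-in model. The 32-character hex id generated with uuid4 is an assumption based on the id shown above, not MLRun's documented format.

```python
import json
import uuid
from typing import Callable, Dict, List

Model = Callable[[List[list]], list]


def handle_request(path: str, body: str, models: Dict[str, Model]) -> dict:
    """Toy router for /v2/models/<name>/infer requests (not MLRun's server)."""
    parts = path.strip("/").split("/")
    if len(parts) != 4 or parts[:2] != ["v2", "models"] or parts[3] != "infer":
        raise ValueError(f"unsupported path: {path}")
    name = parts[2]
    request = json.loads(body)
    predict = models[name]  # raises KeyError for an unknown model name
    return {
        "id": uuid.uuid4().hex,  # assumed format: 32 hex characters
        "model_name": name,
        "outputs": predict(request["inputs"]),
    }


models = {"cancer-classifier": lambda rows: [0 for _ in rows]}  # stand-in model
response = handle_request(
    "/v2/models/cancer-classifier/infer", json.dumps({"inputs": [[13.71, 20.83]]}), models
)
print(response["model_name"], response["outputs"])  # cancer-classifier [0]
```

Routing on the model name in the path is what lets one serving function host several models side by side.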

Done!

Congratulations! You've completed Part 2 of the MLRun getting-started tutorial. Proceed to Part 3: Model serving to learn how to deploy and serve your model using a serverless function.